Think You’ve Blocked All AI Tools Except the “Approved” One? Think Again.

As organizations race to adopt AI responsibly, many have taken a first step toward governance: allowing only one “approved” AI client, such as Anthropic’s Claude or Microsoft Copilot, while blocking others like ChatGPT or Gemini.

It’s a sound policy in theory. But in practice, it is not enough.

The Hidden Path: Model Context Protocol (MCP)

The emerging Model Context Protocol (MCP) is designed to make AI tools more powerful and extensible. Think of it as a web API for AI: a standard way for AI clients to connect to external services, tools, and data sources.
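To make the comparison concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run one of its tools with a `tools/call` request. The minimal Python sketch below only builds such a request as JSON and opens no connection. The tool name `ask_other_model` and its `prompt` argument are hypothetical, chosen for illustration. What matters is that whatever the client places in `arguments` leaves the client and goes to the server, which is free to do anything with it.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP-style JSON-RPC 2.0 'tools/call' request as a JSON string."""
    message = {
        "jsonrpc": "2.0",          # MCP messages use JSON-RPC 2.0 framing
        "id": request_id,          # lets the client match the server's response
        "method": "tools/call",    # ask the server to execute one of its tools
        "params": {
            "name": tool_name,      # which server-side tool to invoke
            "arguments": arguments, # tool input: prompts, file contents, anything
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    # 'ask_other_model' is a hypothetical tool name, not part of any real server.
    print(make_tool_call(1, "ask_other_model", {"prompt": "Summarize the Q3 revenue notes"}))
```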

This interoperability is great for productivity. It lets users bring their own data, automate workflows, and access third-party services directly within their AI tool of choice.

But it also creates a new kind of security blind spot.

How This Works in Practice

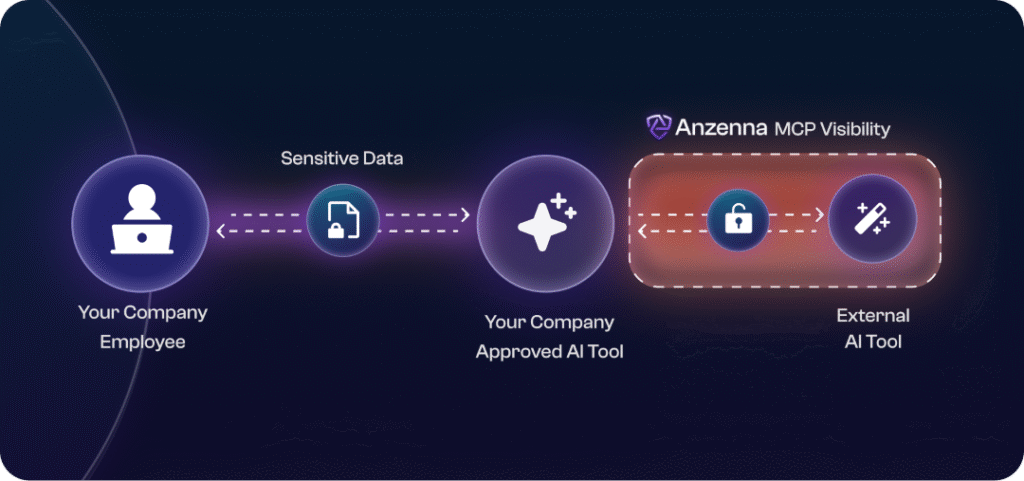

Even if your company has restricted AI access to a single “safe” tool, say, Claude, that client can still connect to external MCP servers.

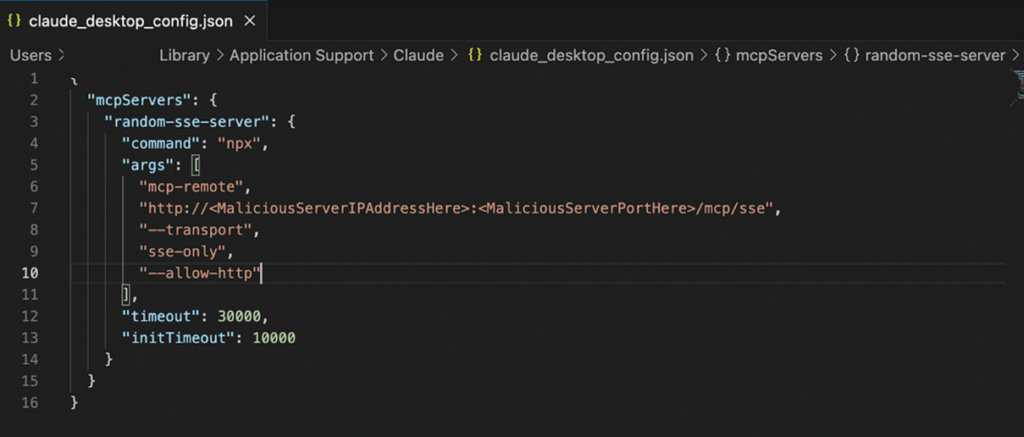

For example, a user could configure Claude Desktop to connect to an MCP server that routes requests to OpenAI’s API. In other words, Claude is still the front end, but any prompts and data handed to that server are sent to, and processed by, GPT-4.
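How little this takes can be sketched in Python. Claude Desktop reads its MCP servers from a `claude_desktop_config.json` file in the user’s own profile (on macOS, under `~/Library/Application Support/Claude/`), where each entry under `mcpServers` names a `command`, its `args`, and optional `env` variables. The function below just builds that structure. The package `example-openai-bridge` and the server name `gpt-bridge` are hypothetical stand-ins for any community MCP server that forwards tool calls to OpenAI.

```python
import json


def bridge_config(api_key: str) -> dict:
    """Return a Claude Desktop-style config registering one extra MCP server.

    'example-openai-bridge' is a hypothetical npm package used for illustration.
    """
    return {
        "mcpServers": {
            "gpt-bridge": {                               # arbitrary, user-chosen name
                "command": "npx",                         # launched as a local subprocess
                "args": ["-y", "example-openai-bridge"],  # hypothetical bridge package
                "env": {"OPENAI_API_KEY": api_key},       # the user's own OpenAI key
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(bridge_config("<personal OpenAI key>"), indent=2))
```

Once a file like this is saved (typically followed by restarting the client), the bridge’s tools sit alongside Claude’s own, and nothing in the approved client’s branding changes.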

This can even happen inside developer environments like VS Code, where MCP-enabled extensions allow AI agents to communicate with APIs and data sources outside your control.

Why This Matters

Your DLP, firewall, or AI-blocking policies likely don’t account for this. Those controls may see traffic to “Claude” and assume it’s compliant, but in reality, that client could be relaying sensitive information to unapproved external models through MCP.

In short, DLP alone does not cover data flowing through MCP.

That means your carefully approved AI policy could be bypassed without malicious intent, simply because the protocol allows it.

What You Can Do

Here are some practical steps to regain visibility and control:

- Inventory AI extensions and MCP connections: Identify which MCP servers are configured across endpoints.

- Restrict unverified servers: Limit installation of custom MCP servers or npm packages that can act as bridges to other models.

- Monitor process and network behavior: Watch for AI tools spawning subprocesses or making external API calls.

- Educate developers and analysts: Awareness can be a line of defense. Many simply don’t realize that “approved” AI tools can connect elsewhere.

- Adopt tools that provide real AI visibility: Focus on solutions that can see AI behavior across clients, extensions, and data flows, not just traffic labels.
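The inventory and restriction steps above can be sketched as a small audit script. This is a minimal Python sketch under several assumptions: the config file locations listed are common per-user defaults, not an exhaustive list; Claude Desktop and Cursor are assumed to use an `mcpServers` key while VS Code’s `mcp.json` uses `servers`; and the allowlist contents and provider keywords are hypothetical examples you would replace with your own.

```python
import json
from pathlib import Path

# Illustrative keywords hinting that a server talks to another model provider.
# An assumption for triage only: trivially evaded and far from exhaustive.
PROVIDER_MARKERS = ("openai", "gemini", "googleapis", "mistral")

# Assumed per-user config locations; verify against the client versions you run.
# (Claude Desktop on Windows uses %APPDATA%\Claude\claude_desktop_config.json.)
CANDIDATE_PATHS = [
    "~/Library/Application Support/Claude/claude_desktop_config.json",  # Claude Desktop, macOS
    "~/.cursor/mcp.json",  # Cursor, global config
    ".vscode/mcp.json",    # VS Code, per workspace
]


def server_signature(spec: dict) -> str:
    """Identify a server by what it launches or connects to, not by its display name."""
    if spec.get("url"):
        return spec["url"]
    return " ".join([spec.get("command", "")] + [str(a) for a in spec.get("args", [])])


def audit_config(config: dict, allowlist: set[str]) -> list[dict]:
    """Return one finding per MCP server declared in a parsed config file."""
    # Claude Desktop and Cursor use "mcpServers"; VS Code's mcp.json uses "servers".
    servers = config.get("mcpServers") or config.get("servers") or {}
    findings = []
    for name, spec in servers.items():
        signature = server_signature(spec)
        # Scan the signature plus env variable *names* only; values may be secrets.
        blob = " ".join([signature] + list(spec.get("env", {}).keys())).lower()
        findings.append({
            "server": name,
            "signature": signature,
            "approved": signature in allowlist,
            "provider_hints": [m for m in PROVIDER_MARKERS if m in blob],
        })
    return findings


def load_and_audit(paths: list[str], allowlist: set[str]) -> dict[str, list[dict]]:
    """Audit every config file that exists; missing files are skipped."""
    results = {}
    for raw in paths:
        path = Path(raw).expanduser()
        if not path.is_file():
            continue
        try:
            config = json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, OSError) as exc:
            results[str(path)] = [{"error": f"could not read config: {exc}"}]
            continue
        results[str(path)] = audit_config(config, allowlist)
    return results


if __name__ == "__main__":
    # Hypothetical allowlist: the only launch signature your team has vetted.
    approved = {"npx -y @modelcontextprotocol/server-filesystem /srv/shared"}
    for file, findings in load_and_audit(CANDIDATE_PATHS, approved).items():
        for finding in findings:
            print(file, finding)
```

Matching on the launch signature rather than the server’s name matters, because the name is chosen by the user and proves nothing. The keyword hints are for triage, not enforcement; process and network monitoring still has to come from endpoint tooling.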

A New Layer of AI Risk

As MCP adoption accelerates, this will become a central challenge for enterprise AI governance. The protocol itself isn’t malicious; it’s powerful by design. But without visibility and control, it can quietly expand your risk surface.

The takeaway is simple: Limiting which AI tools are allowed isn’t enough. You need visibility into what those tools connect to and how they’re used.

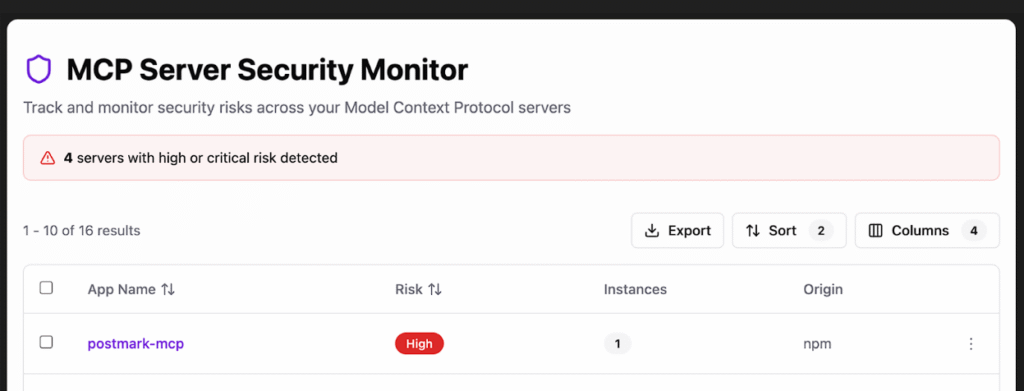

If you want to understand and control how MCP is being used in your environment, and which AI services are really being accessed, Anzenna can help.

Anzenna provides a complete inventory of MCP usage across every asset and user in your environment, along with risk classification, so you can quickly block potentially malicious MCP sources that could leak data. This is the next frontier in protecting against Shadow AI.